Nvidia’s Ramp – Volume, ASP, Cloud Pricing, Margins, EPS, Cashflow, China, Competition

Unraveling the Mixed Signals in the Supply Chain from AI Head-Fakes

The incredible demand to build out AI capacity has been primarily limited by Nvidia’s lack of ability to increase production. We’ve detailed why (CoWoS and HBM), the 28 upstream suppliers who are enabling increased production of GPUs, as well as how many GPUs Nvidia is ramping up to produce. The topic we want to cover today is shipments and the average sales price of H100 GPUs going forward. We will also touch on MI300, L40, and Nvidia’s next-generation GPU. We will walk through our model and what this means for Nvidia’s units, revenue, earnings, and cash flow through FY 2025. Additionally, we will discuss infrastructure pricing, cloud pricing next year, and procurement strategy. Lastly, we will talk about the demand side, China, and the durability of the current overinvestment cycle.

So far, what other companies in the supply chain besides Nvidia have reported is a mixed bag: excitement around AI and GPU demand, coupled with a lack of tangible revenue guidance. Part of that excitement has been dampened by softness in other end markets, especially PC, smartphone, and China generally. Several companies that were bid up as AI winners disappointed, showing that their AI revenue/torque is just not enough to offset weakness elsewhere in their business. We call this group of companies the AI head-fakes.

For example, Super Micro disappointed lofty expectations after a >300% share price run-up this year by guiding to flat revenue for the next quarter. Neither Super Micro nor many of the other AI head-fakes are showing a ramp in AI revenue, which undercuts the AI ramp narrative. The results so far contradict what Nvidia is believed to be doing. This is fuel for those who have doubts about the whole AI story. For some of the AI head-fakes, it is simply that they are trying to claim they are AI winners when they aren’t actually beneficiaries, or they are very commoditized. For others, it is simply the timing of ramp-up, shipments, and revenue recognition.

Let’s talk through the supply chain for this latter case. If Nvidia placed orders in March, even if TSMC started production right away, there is a multi-month process of turning polished silicon wafers into wafers full of 4nm chips. Next, there is the CoWoS packaging supply chain, and many layers of test, as described here. Nvidia then takes these completed H100 chips from TSMC and consigns them to Foxconn, which makes the H100 SXM modules. These are then shipped to Wistron, which receives the modules on consignment, integrates 8 of them alongside 4 NVSwitches and cooling, and delivers a completed and tested H100 baseboard.

Finally, Nvidia gets to ship these GPU baseboards to server manufacturers and recognize revenue in July, 4-5 months after production started. Now the server OEM/ODM needs to build the server, and in many cases help install it in a datacenter on another continent. Super Micro in this example may only get to recognize revenue as late as October, which won’t show up until they release their FY Q2 2024 earnings report in January next year. Coreweave can only start renting these servers in November of 2023. This example is fictitious and deliberately exaggerated to highlight how revenue recognition points and fiscal reporting periods differ from company to company. The supply chain is generally faster in each individual step.
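To make the timing mismatch concrete, the fictitious timeline above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the dates are first-of-month placeholders taken from the example, the fiscal-year-end months (January for Nvidia, June for Super Micro) are simplifications of each company's actual calendar, and the helper names `fiscal_quarter` and `months_between` are hypothetical.

```python
from datetime import date


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end (day of month ignored)."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def fiscal_quarter(d: date, fy_end_month: int) -> str:
    """Label the fiscal quarter containing d.

    Assumes fiscal quarters align to calendar months and that the fiscal
    year is named after the calendar year in which it ends.
    """
    # Months elapsed since the fiscal year began (0..11).
    offset = (d.month - fy_end_month - 1) % 12
    quarter = offset // 3 + 1
    fiscal_year = d.year if d.month <= fy_end_month else d.year + 1
    return f"FY{fiscal_year} Q{quarter}"


# Assumption: production starts in March 2023, as in the example.
production_start = date(2023, 3, 1)

# (event, placeholder date, assumed fiscal-year-end month)
events = [
    ("Nvidia recognizes baseboard revenue", date(2023, 7, 1), 1),
    ("Super Micro recognizes server revenue", date(2023, 10, 1), 6),
]

for label, when, fy_end_month in events:
    lag = months_between(production_start, when)
    print(f"{label}: +{lag} months, lands in {fiscal_quarter(when, fy_end_month)}")
```

Under these assumptions, the same batch of GPUs lands in the quarter Nvidia labels Q2 of its fiscal 2024 but only in Super Micro's fiscal Q2 2024, which is reported months later. The label matches by coincidence of fiscal calendars while the underlying calendar months differ by a full quarter.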

Before we dive into the majority of this report’s contents, let’s just start with an eye-popping stat from our model below, and one you can easily quote when you share.

Nvidia's profit margins and cash flow conversion are so high that by the end of next year, the cash on its balance sheet will be substantial enough to equal half of Intel's current market valuation.

Stay tuned for what we believe they will do with that mountain of cash.

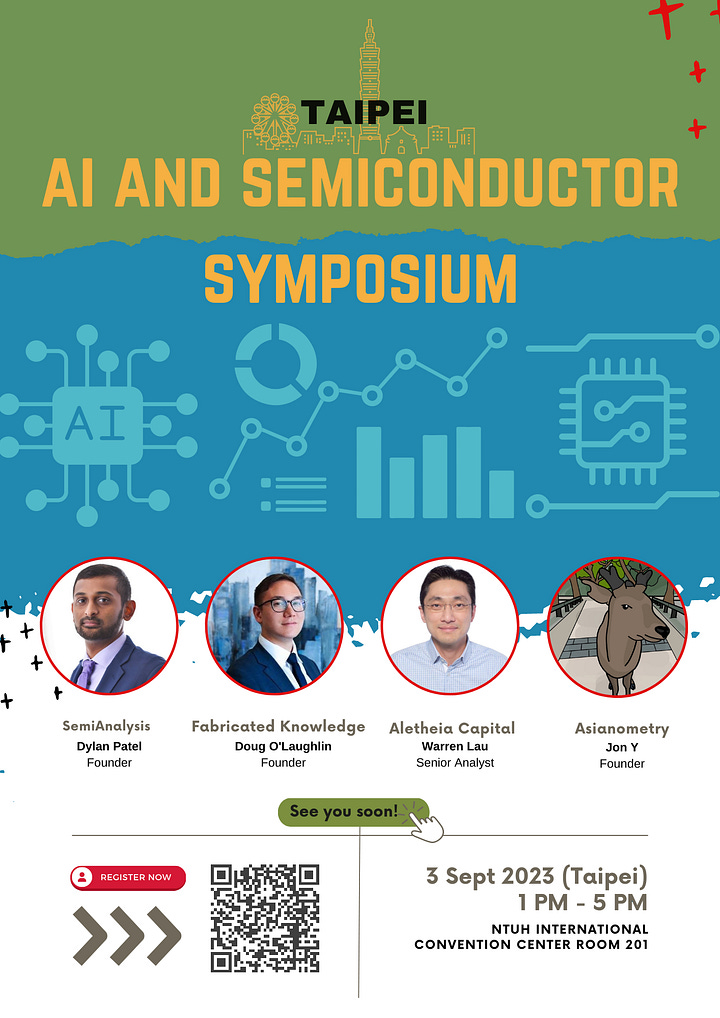

We will be hosting an AI and Semiconductor Symposium in Taiwan on September 3rd with many foundry, packaging, and ODM suppliers, as well as buyside, in attendance. Topics covered include the future of AI infrastructure, the next-generation model architectures employed by Google’s Gemini/Future OpenAI GPT, Chinese analog/power semi fab buildouts, and Nvidia’s current acquisition target. Speakers include multiple members of SemiAnalysis, FabricatedKnowledge, Asianometry, Alethia Capital, and a special secret guest. Register here if you can come!

Nvidia Estimates – Sniff Testing The Hype

The forecast we provide comes from multiple vectors. One is the CoWoS and HBM supply chain. Another is the end market demand/capex plans from various hyperscalers, startups, and enterprises. A third is real estate/power data for physical DC buildouts. The last is the OEM/ODM angle.